Deep Learning

Neuron

Activation (output, activation function)

- Step function

- Sigmoid: used in the output layer for binary classification

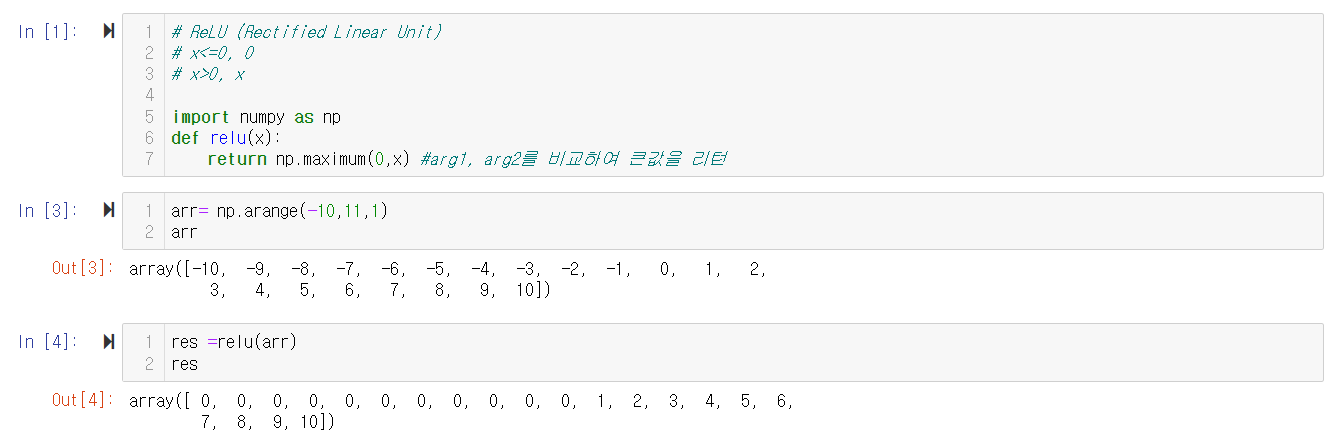

- ReLU (Rectified Linear Unit): the most widely used activation function; x <= 0 -> 0, x > 0 -> x

- Softmax: used for multi-class classification

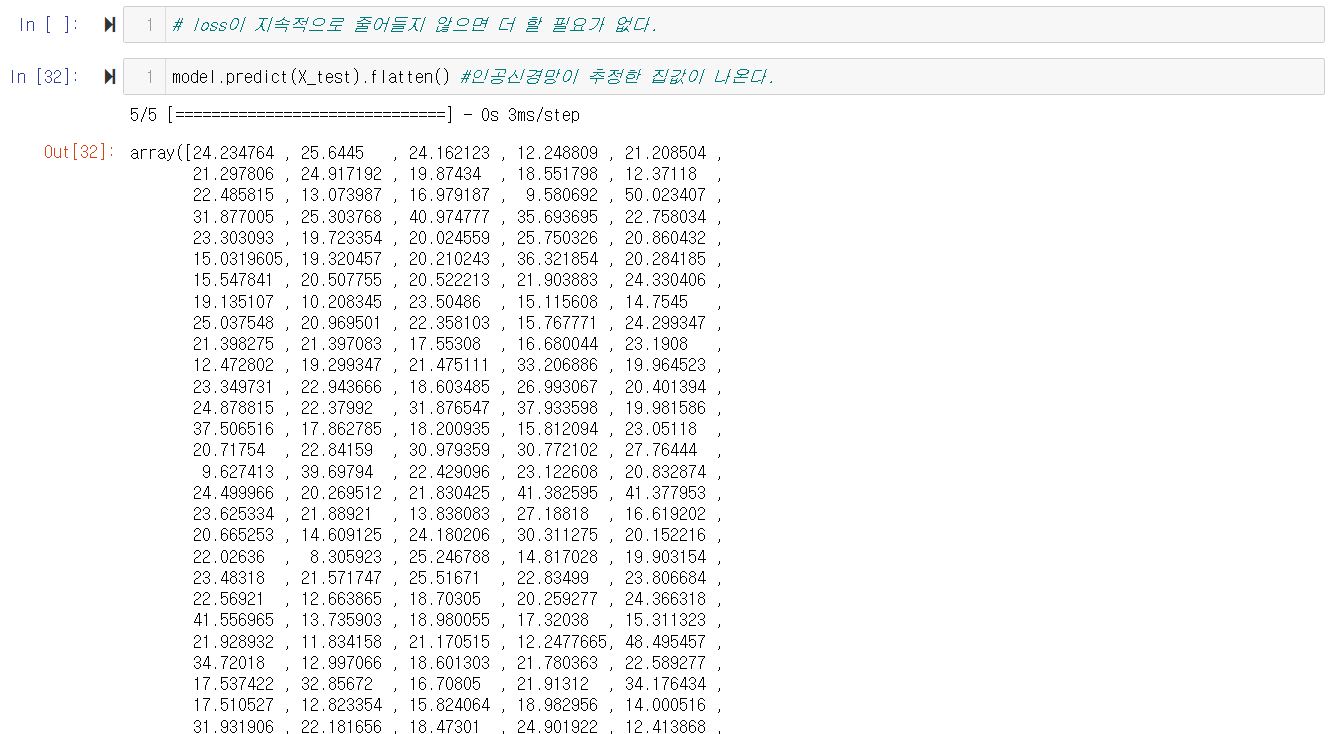
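
The four activation functions listed above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function names are made up for this note, the step function's threshold at 0 is an assumption, and the softmax subtracts the row maximum only for numerical stability.

```python
import numpy as np

def step(x):
    # Step function: 1 where x > 0, otherwise 0 (threshold at 0 is an assumption)
    return np.where(x > 0, 1.0, 0.0)

def sigmoid(x):
    # Squashes any real value into (0, 1); usable as a binary-class probability
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    # x <= 0 -> 0, x > 0 -> x
    return np.maximum(0.0, x)

def softmax(x):
    # Subtract the max before exponentiating to avoid overflow;
    # the result is non-negative and sums to 1 along the last axis
    shifted = x - np.max(x, axis=-1, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=-1, keepdims=True)

x = np.array([-2.0, 0.0, 3.0])
print("step   :", step(x))
print("sigmoid:", sigmoid(x))
print("relu   :", relu(x))
print("softmax:", softmax(x))
```

Sigmoid returns one probability per input, while softmax turns a whole vector of scores into a distribution over classes, which is why the first suits binary and the second multi-class outputs.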

Loss (loss function): compares the Label with the Prediction (estimated value)

- MSE, MAE, Binary_CrossEntropy, CrossEntropy

Gradient Descent
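
Gradient descent minimizes a loss, so it helps to see how the losses named above compare labels with predictions. The sketch below writes them out in NumPy. The function names and the `eps` clipping value are assumptions for illustration, not the API of any particular library.

```python
import numpy as np

def mse(y_true, y_pred):
    # Mean Squared Error: average of squared differences
    return np.mean((y_true - y_pred) ** 2)

def mae(y_true, y_pred):
    # Mean Absolute Error: average of absolute differences
    return np.mean(np.abs(y_true - y_pred))

def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    # y_true holds 0/1 labels, y_prob holds predicted probabilities (e.g. sigmoid output)
    p = np.clip(y_prob, eps, 1.0 - eps)  # avoid log(0)
    return -np.mean(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))

def cross_entropy(y_onehot, y_prob, eps=1e-12):
    # y_onehot holds one-hot labels, y_prob holds class probabilities (e.g. softmax output)
    p = np.clip(y_prob, eps, 1.0)
    return -np.mean(np.sum(y_onehot * np.log(p), axis=-1))

labels = np.array([1.0, 2.0, 3.0])
preds = np.array([1.0, 2.0, 5.0])
print("MSE:", mse(labels, preds))
print("MAE:", mae(labels, preds))
print("BCE:", binary_cross_entropy(np.array([1.0, 0.0]), np.array([0.9, 0.2])))
print("CE :", cross_entropy(np.array([[0.0, 1.0, 0.0]]), np.array([[0.1, 0.8, 0.1]])))
```

MSE and MAE are typically paired with regression targets, while the two cross-entropy losses are paired with sigmoid and softmax outputs respectively.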

- Various optimization algorithms derived from gradient descent (Optimizer)

- SGD (Stochastic Gradient Descent)

- Adam
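
To make the two optimizers above concrete, here is a rough sketch of their update rules applied to a toy loss f(w) = (w - 3)^2. Several things are assumptions for illustration: the toy loss, the learning rates, and the helper names `sgd_step` and `adam_step`. The gradient here is exact; in real SGD it would be estimated from a randomly sampled mini-batch. The Adam hyperparameters (`beta1=0.9`, `beta2=0.999`, `eps=1e-8`) are the commonly used defaults.

```python
import numpy as np

def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2
    return 2.0 * (w - 3.0)

def sgd_step(w, g, lr=0.1):
    # Plain gradient descent update: move against the gradient
    return w - lr * g

def adam_step(w, g, m, v, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    # Running averages of the gradient (m) and squared gradient (v)
    m = beta1 * m + (1.0 - beta1) * g
    v = beta2 * v + (1.0 - beta2) * g * g
    # Bias correction for the zero-initialized averages (t starts at 1)
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    # Step size is scaled per parameter by the gradient magnitude
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w, m, v

w_sgd = 0.0
for _ in range(100):
    w_sgd = sgd_step(w_sgd, grad(w_sgd))

w_adam, m, v = 0.0, 0.0, 0.0
for t in range(1, 501):
    w_adam, m, v = adam_step(w_adam, grad(w_adam), m, v, t)

print("SGD  result:", w_sgd)
print("Adam result:", w_adam)
```

Both runs should end close to the minimum at w = 3. Adam differs from plain SGD in that it keeps momentum and adapts the step size from the gradient history.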

Backpropagation (backward propagation of errors)

Bias, weight
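
Backpropagation uses the chain rule to work out how much the loss changes with respect to each weight and bias. The sketch below does this by hand for a single sigmoid neuron with a squared-error loss, then checks the result against a numerical gradient. The single-neuron setup, the input values, and the function names are all assumptions chosen to keep the example small.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(w, b, x, y):
    # Forward pass: weighted input plus bias, activation, then loss
    z = w * x + b
    a = sigmoid(z)
    loss = (a - y) ** 2
    return z, a, loss

def backward(w, b, x, y):
    # Backward pass: apply the chain rule from the loss back to w and b
    _, a, _ = forward(w, b, x, y)
    dloss_da = 2.0 * (a - y)      # d(loss)/d(a)
    da_dz = a * (1.0 - a)         # derivative of sigmoid
    dloss_dz = dloss_da * da_dz
    dloss_dw = dloss_dz * x       # dz/dw = x
    dloss_db = dloss_dz * 1.0     # dz/db = 1
    return dloss_dw, dloss_db

w, b, x, y = 0.5, -0.2, 1.5, 1.0
dw, db = backward(w, b, x, y)

# Numerical check with central differences
h = 1e-6
dw_num = (forward(w + h, b, x, y)[2] - forward(w - h, b, x, y)[2]) / (2.0 * h)
db_num = (forward(w, b + h, x, y)[2] - forward(w, b - h, x, y)[2]) / (2.0 * h)

print("analytic :", dw, db)
print("numerical:", dw_num, db_num)
```

The analytic and numerical gradients should agree closely. An optimizer would then use `dw` and `db` to update the weight and the bias.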

Overfitting: the model fits the training data too closely and fails to generalize when exposed to real data

Underfitting: the model has learned too little to capture the pattern in the data
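
One common way to spot both problems is to compare the error on the training data with the error on held-out data. The sketch below fits polynomials of increasing degree to a small noisy sample. The synthetic data, the degrees 1, 3, and 9, and the seed are assumptions for illustration, and the exact numbers will vary with them.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    # Noisy samples of sin(3x) on [-1, 1] (synthetic data, chosen for illustration)
    x = rng.uniform(-1.0, 1.0, size=n)
    y = np.sin(3.0 * x) + rng.normal(0.0, 0.1, size=n)
    return x, y

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

x_train, y_train = make_data(15)    # small training set
x_val, y_val = make_data(200)       # held-out data standing in for "real data"

results = {}
for degree in (1, 3, 9):
    # Least-squares polynomial fit; higher degree means a more flexible model
    coeffs = np.polyfit(x_train, y_train, degree)
    train_err = mse(y_train, np.polyval(coeffs, x_train))
    val_err = mse(y_val, np.polyval(coeffs, x_val))
    results[degree] = (train_err, val_err)
    print(f"degree={degree}  train MSE={train_err:.4f}  validation MSE={val_err:.4f}")
```

A model that is too simple tends to show high error on both sets (underfitting). A model that is too flexible tends to show very low training error but a clearly higher validation error (overfitting).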
